This piece follows on from our previous blog post, in which we gave you a top-level view of Conversion Rate Optimisation (CRO) and explained the benefits of applying A/B testing.

Now, we want to illustrate why it’s important, when conducting a CRO test, that the experiment has a clear purpose and that its results help support your hypothesis.

A CRO test needs to be built on a series of validated research methods and data that support and strengthen the hypothesis.

Using data gathered from web analysis, we can decide which changes to make in the experiment and which objectives and goals will be used to measure success. Sources include:

Google Analytics (GA)

Behaviour analytics recording

UX and Design input

How does it work?

Depending on our hypothesis and the changes planned for the experiment, we would then propose a particular type of test.

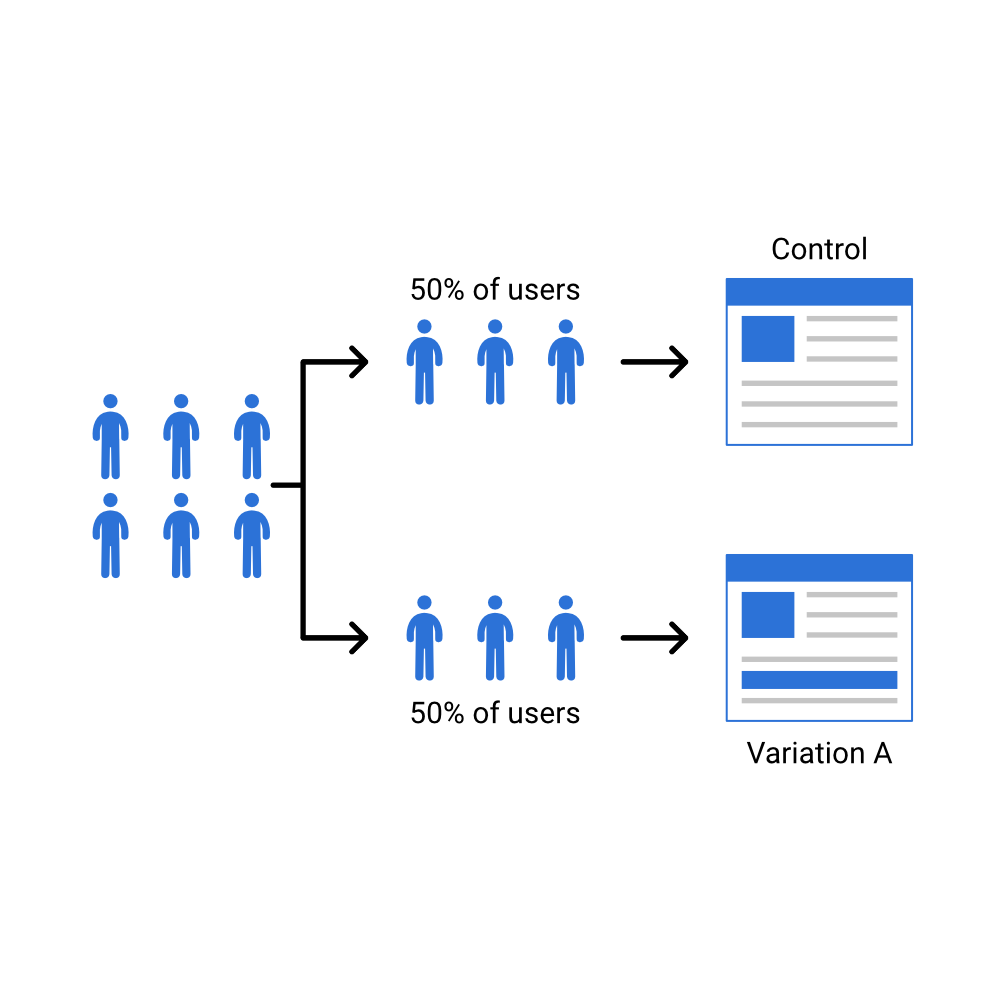

If an experiment has more than one variant, traffic is split between every version, including the control. With an even split, a control and two variants would each receive a third of visitors.
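One common way to split traffic is to hash a stable visitor identifier, so that each visitor is consistently shown the same version on every visit. The sketch below is only an illustration under assumptions: an equal split, and a hypothetical experiment name (`homepage-test`) and user IDs. Testing platforms use their own bucketing logic, so this is not how any particular tool works.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, versions: list) -> str:
    """Deterministically map a visitor to one version, splitting traffic evenly."""
    # Hashing experiment + user ID means a returning visitor always sees the same
    # version, while different experiments bucket the same visitor independently.
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(versions)
    return versions[bucket]


# Hypothetical experiment: a control plus two variants, so each gets roughly a third.
versions = ["control", "variant_a", "variant_b"]
counts = {v: 0 for v in versions}
for i in range(30_000):
    counts[assign_variant(f"user-{i}", "homepage-test", versions)] += 1
print(counts)
```

Each count should land close to 10,000. Hashing rather than picking at random on each page load keeps a visitor’s experience consistent, which matters when they return to the page during the test.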

A/B split testing

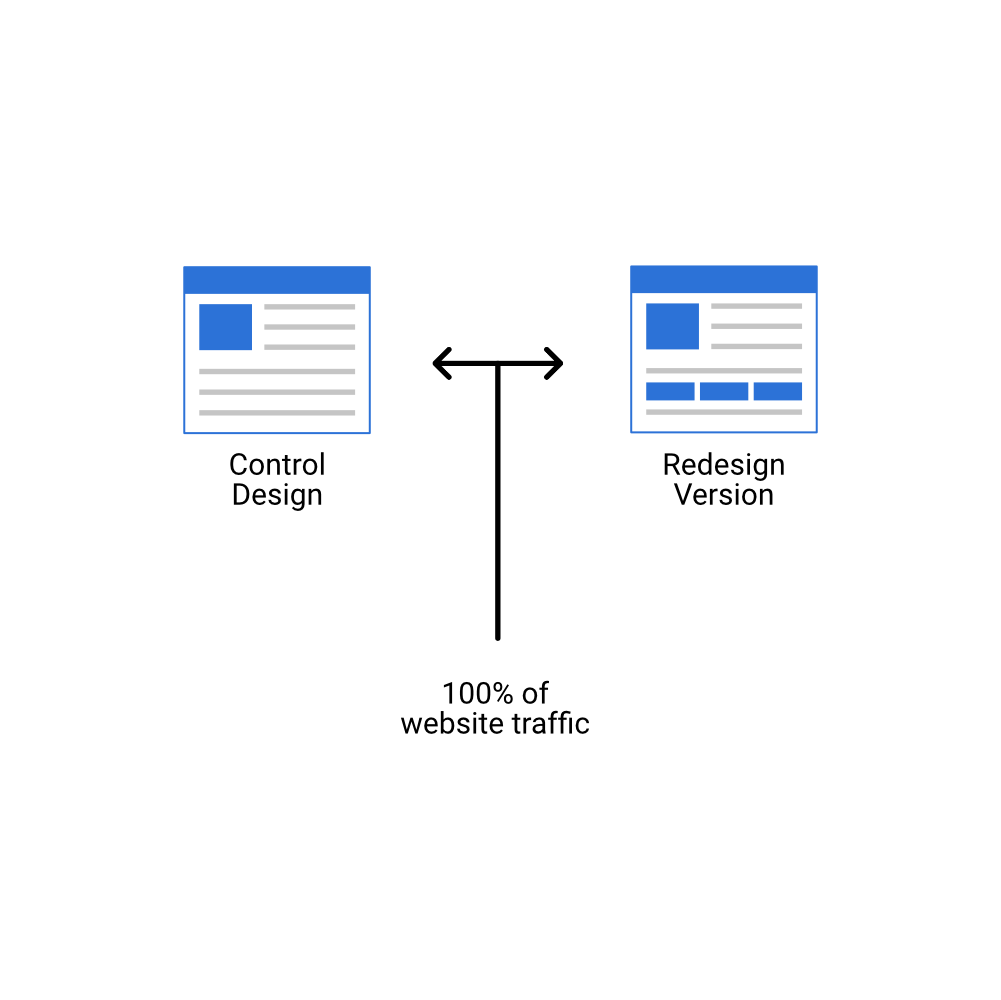

This involves testing a different version of a web page against the original, by changing the content or layout, or perhaps by adding a button that helps drive the audience further into the journey.

The test can be scaled up to a complete page redesign if the data suggests that a revamp of the site could increase conversion rates.

Even something as simple as a sticky search bar on the mobile landing page could help users navigate and give them a quicker journey to making a purchase.

Multivariate test

Multivariate testing is when more than one change is tested at the same time, creating several versions to find the best combination.

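This is best suited to pages with very high traffic (over 50k pageviews), because visitors are divided between every combination and each one needs enough traffic to produce a reliable result.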

Multivariate testing can remove the need to run several A/B tests one after another, because the changes are tested at the same time.

As more changes are added, the number of combinations grows and traffic is divided into ever-smaller segments (quarters, sixths, eighths and so on).
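The way segments shrink can be shown by listing every combination of options, as in a full factorial multivariate test. This is a sketch with hypothetical page elements and an assumed total of 50,000 pageviews over the test period; it assumes traffic is split evenly across combinations.

```python
from itertools import product

# Hypothetical page elements, each with the options under test.
elements = {
    "headline": ["current", "new"],
    "hero_image": ["current", "new"],
    "cta_button": ["current", "new"],
}

# Full factorial: every option of every element is combined with every other.
combinations = list(product(*elements.values()))
share = 1 / len(combinations)  # even split across all combinations

# Assumed total pageviews over the test period, for illustration only.
pageviews = 50_000
for combo in combinations:
    print(dict(zip(elements, combo)), f"{share:.1%} of traffic, ~{pageviews * share:,.0f} pageviews")
```

Three elements with two options each give eight combinations, so each receives an eighth of the traffic. Testing two elements, one with two options and one with three, would give six combinations, the “sixths” case.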

The more combinations there are, the longer the test needs to run, because each combination must receive a fair amount of traffic before its result can be validated.
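To see why duration grows, a rough estimate can use the standard sample-size approximation for comparing two conversion rates, then multiply by the number of versions. This is a minimal sketch under stated assumptions: a 3% baseline conversion rate, a 20% relative uplift to detect, 5% significance, 80% power and 2,000 daily visitors are all hypothetical inputs, and the estimate ignores corrections for comparing many combinations at once, so real tools may recommend longer tests.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_version(baseline_rate, relative_uplift, alpha=0.05, power=0.8):
    """Approximate visitors needed per version for a two-sided two-proportion test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)  # conversion rate we hope to detect
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


def estimated_days(visitors_per_version, n_versions, daily_visitors):
    """Days until every version has received its required number of visitors."""
    return ceil(visitors_per_version * n_versions / daily_visitors)


# Hypothetical inputs: 3% baseline conversion, aiming to detect a 20% relative uplift.
n = sample_size_per_version(0.03, 0.20)
print(n, "visitors per version")
print(estimated_days(n, n_versions=2, daily_visitors=2_000), "days as an A/B test")
print(estimated_days(n, n_versions=8, daily_visitors=2_000), "days with 8 combinations")
```

With the same daily traffic, moving from two versions to eight combinations roughly quadruples the estimated duration, which is why multivariate tests favour high-traffic pages.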

Examples include testing text and visual elements on a web page together, or combining new functionality with a rearranged page layout.

Operative test

Some changes cannot be made by simply modifying an existing page. For example, making a mandatory form field optional could cause problems if the website cannot process the form without it.

In an operative test, a brand-new page is built to mirror the current page and correctly process the site’s next action. Users are redirected to the new version of the page, and some development time is needed to ensure the changes do not affect the site’s content management system.
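The redirect decision itself can be sketched as a small function: bucket the visitor, and if they fall into the variant group, send them to the new page while keeping any query string, such as campaign tags, intact. Everything here is hypothetical (the URL, the `/checkout-new` path, the experiment name and the 50% share), and the sketch only chooses the target URL; issuing the actual HTTP redirect is left to the web server or testing tool.

```python
import hashlib
from urllib.parse import urlsplit, urlunsplit


def redirect_target(request_url, new_path, user_id, experiment, variant_share=0.5):
    """Return the URL to redirect this visitor to, or None to keep them on the original page."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # roughly uniform value in [0, 1)
    if bucket >= variant_share:
        return None  # control group: no redirect
    parts = urlsplit(request_url)
    # Swap in the new page's path but keep the query string (e.g. campaign tags).
    return urlunsplit((parts.scheme, parts.netloc, new_path, parts.query, parts.fragment))


url = "https://www.example.com/checkout?utm_source=newsletter"
print(redirect_target(url, "/checkout-new", "user-42", "optional-field-test"))
```

Keeping the query string means analytics can still attribute redirected visitors to the right campaign, so both versions are compared on the same terms.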

The outcome

As more traffic is sent to the live CRO experiment, the test shows which version of the web page performs best against the goals and conversion rates, and gives an overall probability of which version is the best.
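One common way to produce a “probability to be best” is a Bayesian approach: model each version’s conversion rate with a Beta distribution and count how often each version comes out on top across many random draws. This is a sketch assuming a uniform Beta(1, 1) prior and hypothetical results of 300 and 360 conversions from 10,000 visitors each; testing tools may calculate this figure differently.

```python
import random


def probability_to_be_best(results, draws=20_000, seed=1):
    """results maps version name -> (conversions, visitors)."""
    rng = random.Random(seed)
    wins = {name: 0 for name in results}
    for _ in range(draws):
        # Sample a plausible conversion rate for each version from its Beta posterior.
        sampled = {
            name: rng.betavariate(1 + conv, 1 + visitors - conv)
            for name, (conv, visitors) in results.items()
        }
        wins[max(sampled, key=sampled.get)] += 1
    return {name: count / draws for name, count in wins.items()}


# Hypothetical results: (conversions, visitors) for each version.
results = {"control": (300, 10_000), "variant": (360, 10_000)}
probs = probability_to_be_best(results)
print(probs)
```

The same function works for any number of versions, so it also applies to multivariate combinations.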

By comparing the data, we can make informed decisions on whether the change fares better and whether it has a positive effect on the user journey.
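A frequentist way to compare a control and a variant is the two-proportion z-test, which reports the relative uplift and a p-value for the difference in conversion rates. This is a minimal sketch using hypothetical figures; it assumes independent visitors and a sample large enough for the normal approximation to hold.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of control (a) and variant (b); two-sided test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate if there were no difference
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {"uplift": (p_b - p_a) / p_a, "z": z, "p_value": p_value}


# Hypothetical results: 300 vs 360 conversions from 10,000 visitors each.
result = two_proportion_z_test(300, 10_000, 360, 10_000)
print(result)
```

Here the variant shows a 20% relative uplift with a p-value below 0.05; a negative z would indicate the change performed worse than the control.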

A negative result is just as important: it helps us understand why the hypothesis was incorrect and how the website performed overall.

Recording key learnings helps later on, reminding us of the impact of past tests and how we could make improvements.
